Data ingestion is the backbone of Salesforce Data Cloud. It keeps customer data flowing from sources such as AWS, Salesforce products, other CRMs, and databases. Ingestion can fail for many reasons, including API errors, schema mismatches, and connectivity problems, and each failure disrupts real-time analytics, customer profiles, and decision-making. To catch these failures quickly, you can set up automated email alerts. In this post, you’ll learn how to configure email notifications for Salesforce Data Cloud ingestion failures so your team can act immediately and keep data accurate and continuous.

Use Case:

We have a data stream connected to Amazon S3 for file ingestion, and we want an email notification whenever ingestion from this stream fails.

We can use Salesforce Flow to automate email notifications when data ingestion fails. Let’s see how to set this up in a Salesforce Data Cloud org.

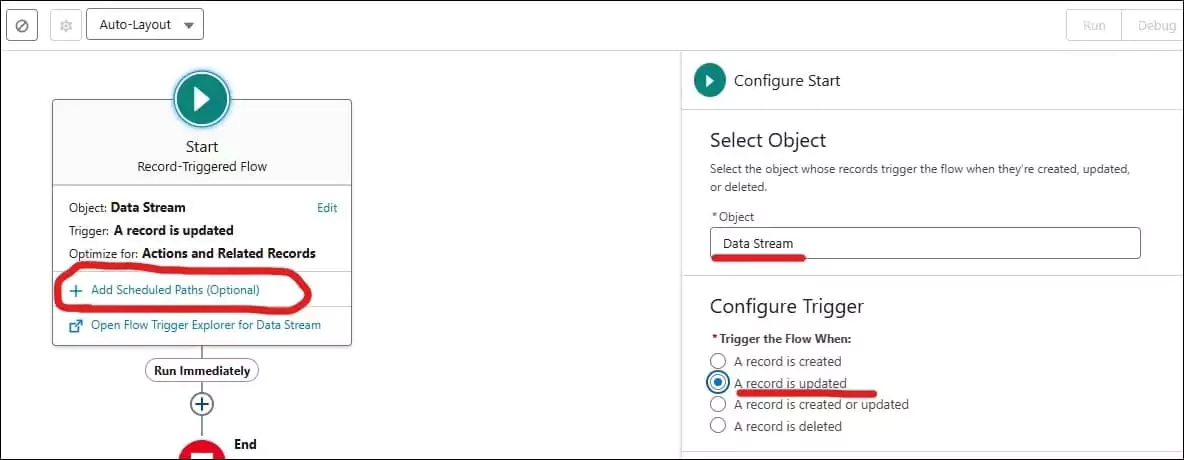

1. Create a Salesforce Flow

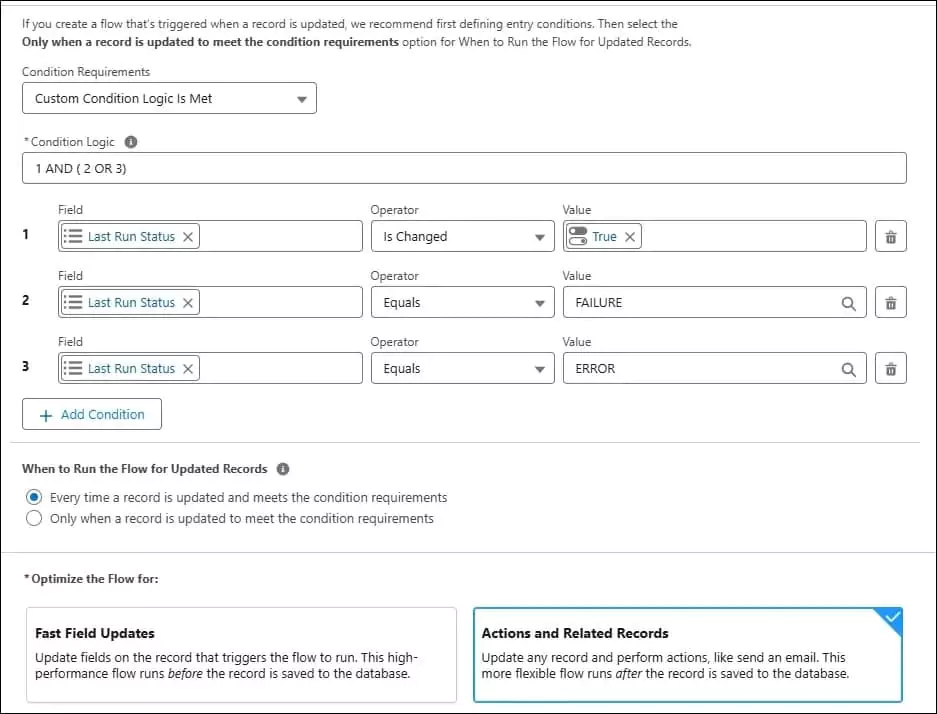

Create a record-triggered flow to automate email notifications for data ingestion failures or errors. Use the Data Stream object as the flow’s triggering object.

Because we only want an email when ingestion fails, add entry criteria so the flow runs only in that case. We use the Last Run Status field of the Data Stream object to identify an ingestion failure.

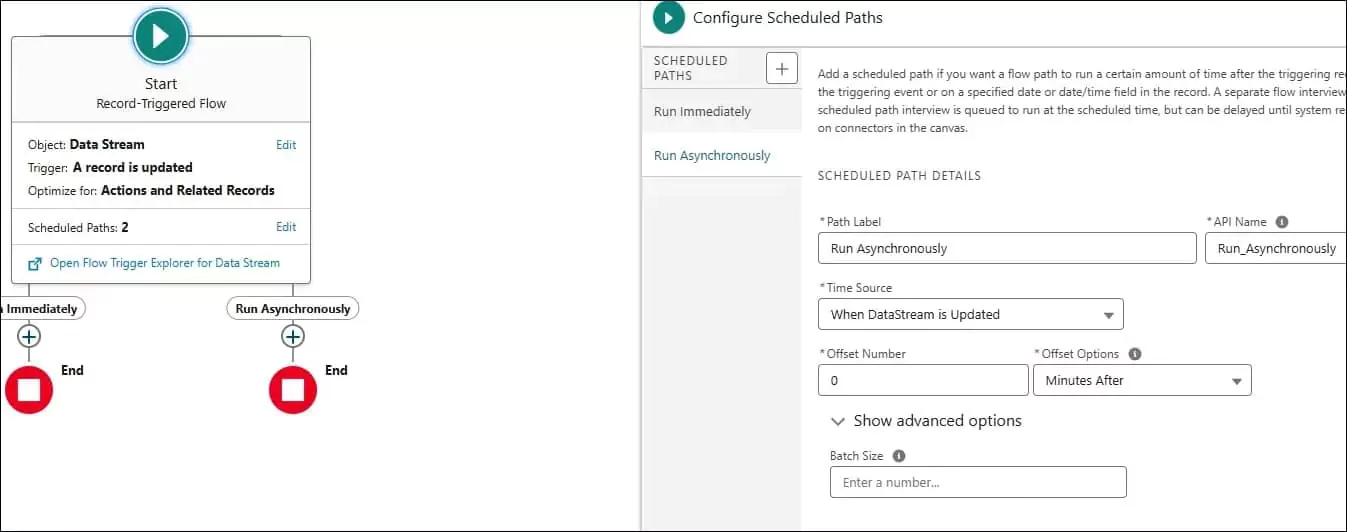
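
Entry criteria are configured declaratively in Flow Builder, but the logic is easy to get wrong, so here is a plain-Python sketch of it (not Flow or Apex). It assumes the flow is set to run only when a record is updated to meet the condition requirements, and the status values `Failure` and `Error` are hypothetical placeholders for whatever Last Run Status actually reports in your org. The idea is that an email fires once when a stream moves into a failed state, not every time an already failing record is saved again.

```python
from typing import Optional

# Hypothetical "Last Run Status" values that mean the ingestion run failed;
# replace them with the values your org actually reports.
FAILURE_STATUSES = {"Failure", "Error"}


def should_notify(old_status: Optional[str], new_status: Optional[str]) -> bool:
    """Return True only when a Data Stream record moves INTO a failure status.

    Mirrors the Flow option "Only when a record is updated to meet the
    condition requirements": re-saving a stream that is already failing
    does not send a second email.
    """
    was_failing = old_status in FAILURE_STATUSES
    is_failing = new_status in FAILURE_STATUSES
    return is_failing and not was_failing


# Example transitions (old status -> new status).
print(should_notify("Success", "Failure"))  # True: a new failure
print(should_notify("Failure", "Failure"))  # False: already failing
print(should_notify("Failure", "Success"))  # False: the stream recovered
```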

2. Add a Scheduled Path to the Flow

Flow can send emails directly, but the flow can throw an error when setup and non-setup objects are updated in the same transaction (a mixed DML error). To avoid this, add a scheduled path so the email logic runs asynchronously. Try your logic in the immediate (synchronous) path first: if it works there, keep the normal flow execution; otherwise, move it to the asynchronous path.

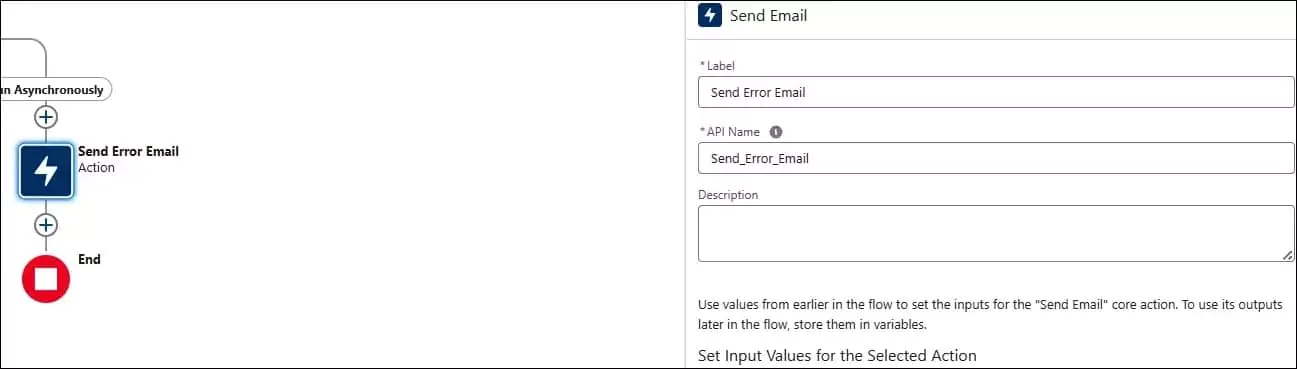

3. Send Email Alert

Add a Send Email action to the flow canvas to send the notification. Set the recipient to whichever address should receive alerts, such as an admin or any other configured user.

You can also restrict notifications to specific data ingestion failures by adding a Decision element before the Send Email action.

Similarly, you can send different emails for different data streams, so that each notification goes to the team responsible for resolving that issue.
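
Routing by data stream boils down to a lookup from stream to owning team. The Python sketch below illustrates that logic outside Salesforce; the stream names, email addresses, and the `build_alert` helper are all hypothetical. In Flow, the same result comes from Decision outcomes feeding separate Send Email actions.

```python
from dataclasses import dataclass

# Hypothetical mapping from data stream name to the owning team's address.
TEAM_BY_STREAM = {
    "S3_Customer_Stream": "crm-data-team@example.com",
    "S3_Orders_Stream": "commerce-data-team@example.com",
}
# Hypothetical fallback recipient when no team owns the stream.
DEFAULT_RECIPIENT = "salesforce-admin@example.com"


@dataclass
class FailureAlert:
    recipient: str
    subject: str
    body: str


def build_alert(stream_name: str, status: str) -> FailureAlert:
    """Pick the recipient for a failed stream and compose the email text."""
    recipient = TEAM_BY_STREAM.get(stream_name, DEFAULT_RECIPIENT)
    subject = f"Data Cloud ingestion failed: {stream_name}"
    body = (
        f"The data stream '{stream_name}' finished with status '{status}'.\n"
        "Please review the stream in Data Cloud and resolve the issue."
    )
    return FailureAlert(recipient, subject, body)
```

Keeping an explicit fallback recipient means a newly added stream still alerts someone even before its owner is configured.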

✅ Best Practices for Managing Data Ingestion Issues

1. Set Up Monitoring & Alerts

- Use automated tools or alert mechanisms (like email or Slack) to notify you immediately when an ingestion fails.

- Monitor both batch and streaming ingestion jobs regularly.

2. Validate Data Before Upload

- Ensure your data files are clean, complete, and in the correct format before sending them to Salesforce Data Cloud.

- Check for null values, missing fields, or incorrect data types.
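
A minimal pre-upload check along these lines can run before files land in S3. This Python sketch uses only the standard library; the columns `Id`, `Email`, and `CreatedDate` and the ISO date format are assumptions, so substitute your data stream’s actual fields and types.

```python
import csv
import io
from datetime import date
from typing import Callable, Dict, List

# Hypothetical schema: column name -> parser that raises ValueError on bad input.
SCHEMA: Dict[str, Callable[[str], object]] = {
    "Id": str,
    "Email": str,
    "CreatedDate": date.fromisoformat,  # expects YYYY-MM-DD
}


def validate_csv(text: str) -> List[str]:
    """Return a list of problems found in the CSV text; empty means it looks valid."""
    reader = csv.DictReader(io.StringIO(text))
    missing = set(SCHEMA) - set(reader.fieldnames or [])
    if missing:
        return [f"missing columns: {sorted(missing)}"]

    problems: List[str] = []
    # Line 1 is the header, so data rows start at line 2
    # (assuming no field contains an embedded newline).
    for line_no, row in enumerate(reader, start=2):
        for column, parse in SCHEMA.items():
            value = (row.get(column) or "").strip()
            if not value:
                problems.append(f"line {line_no}: {column} is empty")
                continue
            try:
                parse(value)
            except ValueError:
                problems.append(f"line {line_no}: {column} has invalid value {value!r}")
    return problems
```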

3. Use Consistent File Naming Conventions

Use a consistent naming standard for files so it’s easier to track what was ingested and when. For example, combine the ingestion type, the source system’s short name, a descriptive file name, and the date (and time, if needed): Batch_S3_CustomerIngestion20250708.csv or Stream_S3_CustomerIngestion20250708.csv.
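
To keep names consistent, you can generate and check them in code instead of typing them by hand. This Python sketch assumes the pattern `<Type>_<System>_<Name><YYYYMMDD>.csv` inferred from the examples above; the optional `HHMM` time suffix is an assumption, since the examples show only the date, and the helper names are hypothetical.

```python
import re
from datetime import datetime
from typing import Dict, Optional

# Pattern inferred from the examples: <Type>_<System>_<Name><YYYYMMDD>[HHMM].csv
# The optional HHMM suffix is an assumption. <Name> is letters only so the
# digits that follow it can only be the date (and time).
FILE_NAME_RE = re.compile(
    r"^(?P<kind>Batch|Stream)_(?P<system>[A-Za-z0-9]+)_"
    r"(?P<name>[A-Za-z]+)(?P<date>\d{8})(?P<time>\d{4})?\.csv$"
)


def build_file_name(kind: str, system: str, name: str, when: datetime,
                    include_time: bool = False) -> str:
    """Assemble a file name that follows the convention."""
    stamp = when.strftime("%Y%m%d%H%M" if include_time else "%Y%m%d")
    return f"{kind}_{system}_{name}{stamp}.csv"


def parse_file_name(file_name: str) -> Optional[Dict[str, Optional[str]]]:
    """Split a conforming file name into its parts; return None if it doesn't match."""
    match = FILE_NAME_RE.match(file_name)
    return match.groupdict() if match else None
```

Running `parse_file_name` on incoming files is a cheap way to reject anything that breaks the convention before it is ingested.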

4. Keep Source Systems Stable

Ensure APIs, data connectors, and file transfer mechanisms from source systems are reliable and well-maintained.

5. Log and Track Failures

Maintain a failure log for debugging and audits. For each failure, record the timestamp, source, error type, and resolution steps so admins can use the log for troubleshooting and audit purposes.
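
A failure log can be as simple as one JSON object per line. The Python sketch below shows one possible entry shape with the four fields listed above; the class and helper names, and the choice of JSON Lines, are assumptions rather than a Data Cloud feature.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import List


@dataclass
class IngestionFailure:
    """One failure-log entry (hypothetical shape, not a Data Cloud object)."""
    source: str        # data stream or source system, e.g. "S3_Customer_Stream"
    error_type: str    # illustrative category, e.g. "SchemaMismatch"
    resolution_steps: List[str] = field(default_factory=list)
    # UTC timestamp in ISO 8601, filled in automatically when not supplied.
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def to_json_line(entry: IngestionFailure) -> str:
    """Serialise one entry as a single JSON Lines record."""
    return json.dumps(asdict(entry)) + "\n"


def from_json_line(line: str) -> IngestionFailure:
    """Rebuild an entry from a JSON Lines record."""
    return IngestionFailure(**json.loads(line))


def append_to_log(path: str, entry: IngestionFailure) -> None:
    """Append the entry to a local log file, one JSON object per line."""
    with open(path, "a", encoding="utf-8") as log:
        log.write(to_json_line(entry))
```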

6. Retry with Caution

Implement retry logic with a delay between attempts, especially for real-time or streaming data. Whether and how to retry depends on your business requirements, so agree on the approach with stakeholders before implementing it.
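
A common way to add a delay between retries is exponential backoff with jitter. This Python sketch is generic and not tied to any Salesforce API; which exceptions count as transient (here `ConnectionError` and `TimeoutError`) and the default delay values are assumptions to settle with your stakeholders.

```python
import random
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def retry_with_backoff(
    operation: Callable[[], T],
    max_attempts: int = 4,
    base_delay: float = 1.0,
    max_delay: float = 30.0,
    retry_on: Tuple[Type[BaseException], ...] = (ConnectionError, TimeoutError),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run `operation`, retrying transient failures with exponential backoff.

    The wait before retry n (0-based) is base_delay * 2**n, capped at
    max_delay and scaled by a random factor in [0.5, 1.0] (jitter) so that
    many clients do not retry at the same moment. Exceptions not listed in
    `retry_on` propagate immediately; after the last attempt the final
    exception is re-raised.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return operation()
        except retry_on:
            if attempt == max_attempts - 1:
                raise
            delay = min(max_delay, base_delay * 2 ** attempt)
            sleep(delay * random.uniform(0.5, 1.0))
    raise RuntimeError("unreachable")
```

Passing `sleep` as a parameter lets you test the retry behavior without actually waiting.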

7. Enable Data Deduplication

Prevent duplicate records by using identity resolution or unique keys during ingestion. This is the most important practice: duplicate records in Data Cloud make analytics and reports unreliable, and they also add extra cost.
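
Inside Data Cloud, deduplication is handled by identity resolution and the primary key you set on the data stream. If you also want to clean a file before upload, the Python sketch below keeps one record per unique key, preferring the most recently modified row; the key fields and the `LastModified` column are hypothetical.

```python
from typing import Dict, Iterable, List, Tuple

Record = Dict[str, str]


def deduplicate(records: Iterable[Record], key_fields: Tuple[str, ...],
                updated_field: str = "LastModified") -> List[Record]:
    """Keep one record per unique key, preferring the most recently updated.

    Timestamps are compared as strings, which is only correct for a sortable
    format such as ISO 8601 (YYYY-MM-DDTHH:MM:SS).
    """
    latest: Dict[Tuple[str, ...], Record] = {}
    for record in records:
        # Normalise key values so "A@x.com " and "a@x.com" count as the same key.
        key = tuple(record[f].strip().lower() for f in key_fields)
        current = latest.get(key)
        if current is None or record[updated_field] > current[updated_field]:
            latest[key] = record
    # Results keep the order in which each key was first seen.
    return list(latest.values())
```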

8. Use Sandbox for Testing

Test ingestion setups in a sandbox before moving to production. This catches unexpected issues early and reduces the risk of ingesting invalid data.

9. Document Data Flow

Keep a clear map of all data sources, ingestion methods, and schedules. This simplifies troubleshooting and makes ingestion easier to maintain.

10. Work with Stakeholders

Regularly communicate with data providers and business teams to align on data expectations and issues.
